Biography

I received an M.S. from the University of Technology, Graz and a Ph.D. from the Technical University of Vienna, Austria in Applied Mathematics. I am a Research Scientist at the Center for Automation Research at the Institute for Advanced Computer Studies at UMD. I co-founded the Autonomy Cognition and Robotics (ARC) Lab and co-lead the Perception and Robotics Group at UMD. I am the PI of an NSF-sponsored Science of Learning Center Network for Neuromorphic Engineering and co-organize the Neuromorphic Engineering and Cognition Workshop.

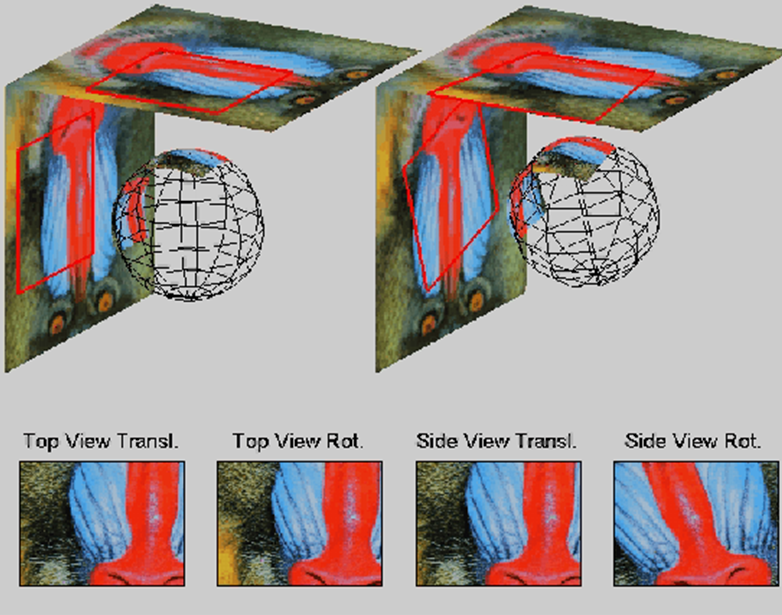

My research is in the areas of Computer Vision, Robotics, and Human Vision, focusing on biologically inspired solutions for active vision systems. I have modeled perception problems using tools from geometry, statistics, and signal processing and developed software in the areas of multiple view geometry, motion, navigation, shape, texture, and action recognition. I have also combined computational modeling with psychophysical experiments to gain insights into human motion and low-level feature perception.

My current work is on robot vision in the following two areas:

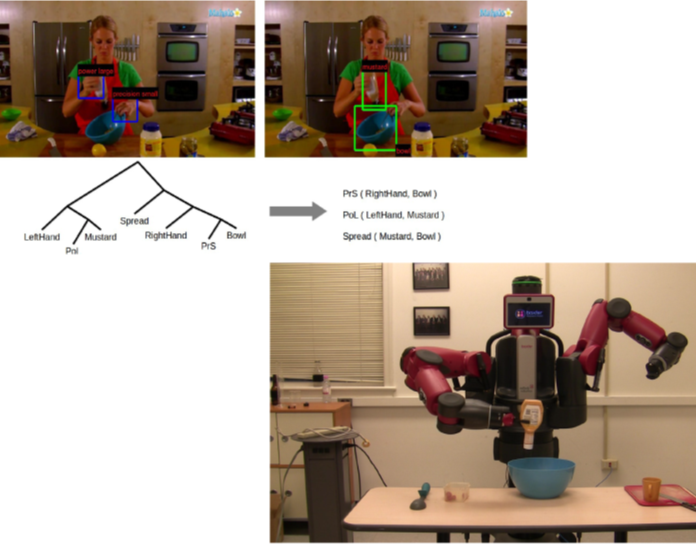

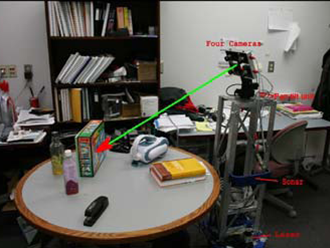

1) Integrating perception, action, and high-level reasoning to interpret human manipulation actions with the ultimate goal of advancing collaborative robots and creating robots that visually learn from humans.

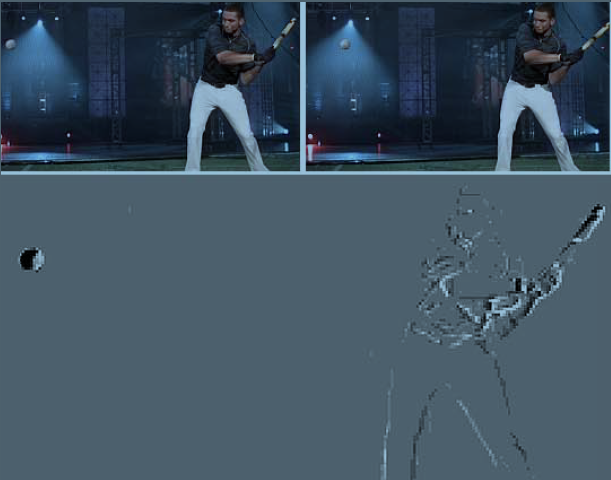

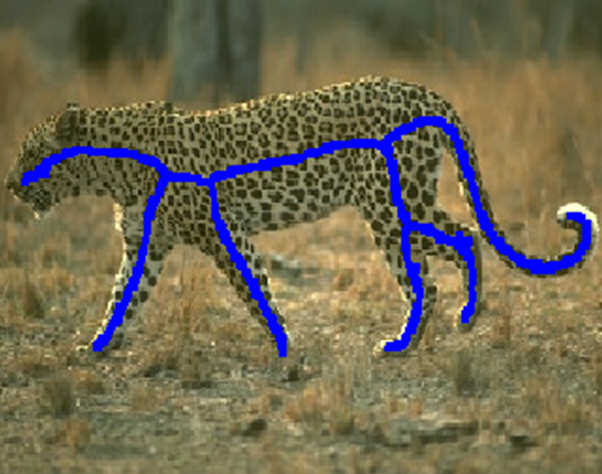

2) Motion processing for fast active robots (such as drones) using bio-inspired event-based sensors as input.