The Secret Life of a Compromised Certificate: New Evidence About the Certificate Used to Sign Pipemon

Written by Doowon Kim, Kiran Kokilepersaud, and Tudor Dumitras. Published: June 4, 2020

Have you ever read a news article about a stolen private key associated with an Authenticode (Windows) code-signing certificate being used to sign malware and wondered why the certificate wasn’t promptly revoked? Or how much malware was signed in this way, and whether it is still causing damage?

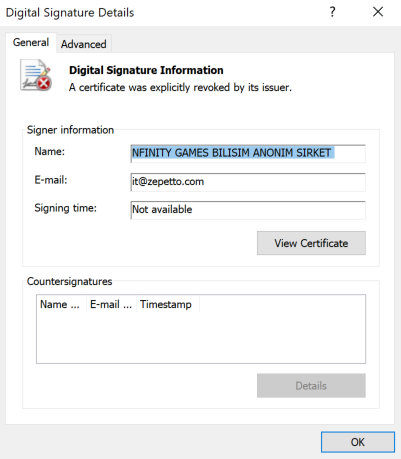

An example of such an attack was recently in the news [1], [2], [3]: cyber security firm ESET discovered a new backdoor called Pipemon, used by the Winnti group in 2020 against several video gaming companies. The backdoor was signed with a legitimate Authenticode certificate issued to Nfinity Games, a gaming company that had experienced a breach back in 2018. As part of this breach the private key associated with Nfinity’s code-signing certificate had likely been stolen, according to the news reports.

Unfortunately, long revocation delays like this one are not unusual for compromised code-signing certificates. A big reason is the opaque nature of the code-signing Public Key Infrastructure (PKI): there is no database of issued certificates (or even of the certificates known to be compromised), and it is hard even to tell when a certificate has been revoked.

In our research group at the University of Maryland, we have an active project investigating the code-signing PKI. We are studying how the opacity of this PKI gives attackers the upper hand in abusing code-signing certificates, as in the recent Winnti attacks. Our research builds on partnerships with industry and on data collection efforts, which allowed us to uncover new evidence about how the Nfinity Games certificate was abused.

In this post, we explain some of the findings from our research through the lens of this new evidence. Our explanations assume a basic knowledge of how code signing works; for more background, we refer the reader to our published papers [1], [2], [3] on this topic.

Malware signed with the stolen certificate appeared as early as January 2019

Ordinarily, it is hard to determine exactly how a stolen Authenticode certificate has been abused. We must first find the binaries that were signed with it, but these binaries reside on the hosts targeted by the attacks and cannot be found with Internet-wide scans. However, our research on the code-signing PKI has allowed us to collaborate with several security companies that collect and analyze malicious binaries. In particular, we worked with ReversingLabs to search their dataset for binaries signed with the stolen certificate. This search turned up 15 binaries: 10 malware samples and 5 benign ones. The malware samples are listed in the table below:

Signed Malware Samples

| SHA1 | Famaily | Properly Signed | First Seen |

|---|---|---|---|

| 4b90e2e2d1dea7889dc15059e11e11353fa621a6 | Pipemon | Y | 2020-05-22 |

| 23789b2c9f831e385b22942dbc22f085d62b48c7 | Pipemon | Y | 2020-05-22 |

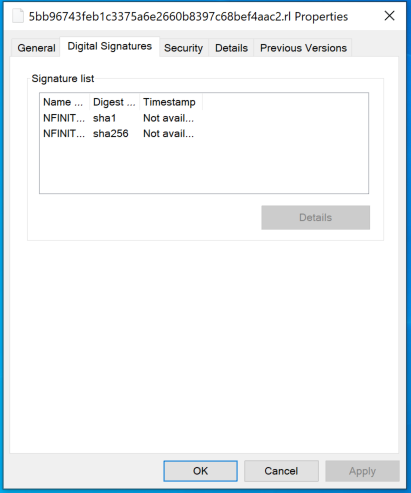

| 5bb96743feb1c3375a6e2660b8397c68bef4aac2 | Pipemon | Y | 2020-05-22 |

| af9c220d177b0b54a790c6cc135824e7c829b681 | Can | N | 2019-11-15 |

| 6b44e6b2dc9abebdba904a9443995b996c989393 | Pipemon | Y | 2020-04-16 |

| e422cc1d7b2958a59f44ee6d1b4e10b524893e9d | Pipemon | Y | 2020-05-22 |

| 751a9cbffec28b22105cdcaf073a371de255f176 | Netcat | Y | 2019-07-25 |

| 6f1b4ccd2ad5f4787ed78a7b0a304e927e7d9a3c | Agentb | Y | 2019-01-08 |

| 4a240edef042ae3ce47e8e42c2395db43190909d | PUA.Htran | Y | 2019-07-16 |

| 48230228b69d764f71a7bf8c08c85436b503109e | Frs | Y | 2019-07-15 |

This gives us new information about the timeline of the attack. The previous articles covering this story assumed that the private key for the certificate must have been stolen during the attack on Nfinity Games in 2018. This assumption is consistent with the dates on which the signed malware samples began to appear in the wild: the first one appeared on January 8, 2019, and others followed through 2019 and 2020. Because the earliest malware sample was properly signed, the attackers must have been using the signing key by the beginning of 2019 or earlier, that is, before the attack on Nfinity was reported to the public.

More importantly, the attacks are larger in scale than previously reported. The news articles about this stolen private key identified only the Pipemon backdoor as malware signed with it. However, the data above show that the key was also used to sign other types of malware (Netcat, AgentB, Htran, and Frs). As these malware families were not reported in connection with the recent Winnti attacks, they may have been used against different targets, with the stolen certificate helping them stay under the radar. It is also possible that more signed malware samples exist beyond the 10 we have found, and their impact is unknown.
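Both observations can be read directly off the table. The short Python sketch below transcribes its ten rows (the `Sample` record and the function names are our own, chosen for illustration) and derives two things: the latest date by which the attackers must have held the key, counting only properly signed samples, and the families other than Pipemon that carry a proper signature.

```python
from datetime import date
from typing import List, NamedTuple


class Sample(NamedTuple):
    sha1: str
    family: str
    properly_signed: bool
    first_seen: date


# The ten signed malware samples, transcribed from the table above.
SAMPLES = [
    Sample("4b90e2e2d1dea7889dc15059e11e11353fa621a6", "Pipemon", True, date(2020, 5, 22)),
    Sample("23789b2c9f831e385b22942dbc22f085d62b48c7", "Pipemon", True, date(2020, 5, 22)),
    Sample("5bb96743feb1c3375a6e2660b8397c68bef4aac2", "Pipemon", True, date(2020, 5, 22)),
    Sample("af9c220d177b0b54a790c6cc135824e7c829b681", "Can", False, date(2019, 11, 15)),
    Sample("6b44e6b2dc9abebdba904a9443995b996c989393", "Pipemon", True, date(2020, 4, 16)),
    Sample("e422cc1d7b2958a59f44ee6d1b4e10b524893e9d", "Pipemon", True, date(2020, 5, 22)),
    Sample("751a9cbffec28b22105cdcaf073a371de255f176", "Netcat", True, date(2019, 7, 25)),
    Sample("6f1b4ccd2ad5f4787ed78a7b0a304e927e7d9a3c", "Agentb", True, date(2019, 1, 8)),
    Sample("4a240edef042ae3ce47e8e42c2395db43190909d", "PUA.Htran", True, date(2019, 7, 16)),
    Sample("48230228b69d764f71a7bf8c08c85436b503109e", "Frs", True, date(2019, 7, 15)),
]


def key_in_use_by(samples: List[Sample]) -> date:
    """Latest date by which the attackers must have held the private key.

    Only properly signed samples count: a malformed signature can be copied
    from any legitimately signed binary without access to the key.
    """
    return min(s.first_seen for s in samples if s.properly_signed)


def other_families_signed_with_key(samples: List[Sample]) -> List[str]:
    """Families other than Pipemon that appear with a proper signature."""
    return sorted({s.family for s in samples if s.properly_signed and s.family != "Pipemon"})


if __name__ == "__main__":
    print("key in use by:", key_in_use_by(SAMPLES))
    print("other families:", other_families_signed_with_key(SAMPLES))
```

Running it prints `2019-01-08` and the four families Agentb, Frs, Netcat, and PUA.Htran. The "Can" sample is excluded by design, because its signature is not properly formed and so says nothing about who held the key.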

This example illustrates the difficulties in detecting malicious usage of certificates in the Code-Signing PKI. In a 2017 paper on the abuse of Authenticode certificates, we showed that stolen certificates are used by malware more widely than previously thought. For example, we found such a signed malware sample from 2003, seven years before Stuxnet brought this form of abuse to the attention of security researchers.

One malware sample has a malformed signature

The third column in the table above indicates whether each malware sample was properly signed; one was not. Its signature was not generated with the private key over that binary; instead, it was simply copied over from a benign, properly signed sample. This is called a malformed signature, because the hash recorded in the signature does not match the hash of the binary.

This is puzzling because such malformed signatures can be created without compromising the certificate; the attacker would have needed only a properly signed binary, for instance one of the games distributed by Nfinity. This sample may have been created by a different attacker who really wanted to impersonate a gaming company, a common strategy in recent supply chain attacks, or to masquerade as the Winnti group. It is also possible that the Winnti attackers simply made a mistake when signing that malware sample.

Whatever its origin, this kind of malformed signature is common in malware. In our 2017 paper we showed that nearly one third of “signed” malware takes this approach, as it may help the malicious code evade some Anti-Virus (AV) detections.
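To make the notion of a malformed signature concrete, here is a simplified Python sketch of the check. An Authenticode signature embeds a digest of the PE file computed over everything except the optional-header CheckSum field, the Certificate Table data-directory entry, and the certificate table (the signature blob) itself. The sketch recomputes that digest with the standard library and compares it with the digest recorded in the signature. Two assumptions apply: extracting the signed digest from the PKCS#7 blob is not shown (it is passed in as `signed_digest`), and the file is hashed linearly rather than section by section in the order the specification prescribes, which we assume yields the same result for the common contiguous layout. The function names are hypothetical.

```python
import hashlib
import struct


def authenticode_style_digest(pe: bytes, algorithm: str = "sha256") -> bytes:
    """Simplified Authenticode digest of a PE image.

    Skips the CheckSum field, the Certificate Table directory entry, and the
    certificate table; everything else is hashed in file order (assumption:
    headers and sections are contiguous, and at least 5 data directories exist).
    """
    (e_lfanew,) = struct.unpack_from("<I", pe, 0x3C)
    if pe[e_lfanew:e_lfanew + 4] != b"PE\0\0":
        raise ValueError("not a PE file")
    opt = e_lfanew + 4 + 20              # optional header follows the 20-byte COFF header
    (magic,) = struct.unpack_from("<H", pe, opt)
    if magic == 0x10B:                   # PE32
        data_dirs = opt + 96
    elif magic == 0x20B:                 # PE32+
        data_dirs = opt + 112
    else:
        raise ValueError("unknown optional-header magic")
    checksum = opt + 64                  # same offset for PE32 and PE32+
    cert_entry = data_dirs + 4 * 8       # directory index 4 is the Certificate Table
    cert_offset, cert_size = struct.unpack_from("<II", pe, cert_entry)
    if cert_size == 0:
        cert_offset = len(pe)            # unsigned file: nothing to exclude at the end

    h = hashlib.new(algorithm)
    h.update(pe[:checksum])
    h.update(pe[checksum + 4:cert_entry])
    h.update(pe[cert_entry + 8:cert_offset])
    h.update(pe[cert_offset + cert_size:])
    return h.digest()


def is_malformed(pe: bytes, signed_digest: bytes, algorithm: str = "sha256") -> bool:
    """True if the digest recorded in the signature does not match this binary."""
    return authenticode_style_digest(pe, algorithm) != signed_digest
```

A signature transplanted from another binary fails this comparison, because its recorded digest describes the donor file. The check cannot tell who made the copy, which is exactly the ambiguity discussed above.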

When was the stolen certificate revoked?

The primary defense against such abuse in the PKI is revocation. Only the issuing Certificate Authority (CA) can revoke a certificate, which it does after discovering that the certificate has been compromised. Clients have two ways to check a certificate’s revocation status: CRLs (Certificate Revocation Lists) and OCSP (Online Certificate Status Protocol).

If you use these methods today to check the revocation status of the Nfinity certificate, you will get a revocation date of “May 10 00:00:00 2017 GMT.” This predates the recent attacks, the new signed malware we discovered, and even the reported Nfinity breach.

Many people are confused by the revocation date in the code-signing PKI. Those familiar with the Web PKI naturally assume that it indicates when the issuing CA revoked the certificate. In the code-signing PKI, however, the date determines which binaries signed with the revoked certificate remain valid; it is called the effective revocation date. For example, if the effective revocation date is set to May 10, 2017, all binaries signed after that date should be considered invalid, while binaries signed before it remain valid. For the compromised Nfinity certificate, all the signed malware samples (including the new ones reported here) were invalidated once the revocation was published in CRLs and OCSP. But when did this happen?
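The validity rule just described fits in a few lines. In this minimal Python sketch (function names are hypothetical), the effective revocation date acts as a cutoff: a signature made before it stays valid, one made on or after it does not. We assume the signing time comes from a trusted timestamp; how a verifier treats signatures without one is outside the sketch. The date format parsed is the one shown above for the Nfinity certificate.

```python
from datetime import datetime, timezone
from typing import Optional


def parse_revocation_date(text: str) -> datetime:
    """Parse a revocation date printed as, e.g., 'May 10 00:00:00 2017 GMT'."""
    parsed = datetime.strptime(text, "%b %d %H:%M:%S %Y GMT")
    return parsed.replace(tzinfo=timezone.utc)


def signature_valid(signed_at: datetime, effective_revocation: Optional[datetime]) -> bool:
    """Validity of a signature under code-signing revocation semantics.

    The effective revocation date is a cutoff: signatures made before it stay
    valid, signatures made on or after it are invalid. `signed_at` is assumed
    to come from a trusted timestamp; None means the certificate is not revoked.
    """
    if effective_revocation is None:
        return True
    return signed_at < effective_revocation


if __name__ == "__main__":
    cutoff = parse_revocation_date("May 10 00:00:00 2017 GMT")
    # First-seen date used as a stand-in for the signing time (an assumption).
    print(signature_valid(datetime(2019, 1, 8, tzinfo=timezone.utc), cutoff))
```

With the Nfinity cutoff of May 10, 2017, a binary signed in January 2019 fails the check, while one signed in 2016 would still pass. The check only takes effect once a client actually sees the revocation, which is why the publication date matters.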

CRLs and OCSP provide only the effective revocation date, not the revocation publication date, i.e., the date when the CA actually performed the revocation. For this reason, the previous news articles reported that the certificate was revoked after Nfinity was notified of the recent attack, but did not give a precise date.

To understand the effectiveness of the revocation process, we built a collection system that periodically crawls CRLs and collects information about revoked code-signing certificates. By comparing consecutive snapshots of a CRL, we infer the revocation publication dates of the certificates added to it, which tells us when CAs performed the revocations. Using this system, we determined that the compromised Nfinity certificate was revoked on May 6, 2020. Before that date, all the properly signed malware samples would have been considered valid. In other words, it took almost 16 months to revoke the compromised certificate after the signed malware appeared in the wild in January 2019.
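The snapshot-comparison idea can be sketched as follows. This is not the collection system itself, only a minimal Python illustration, assuming each crawled CRL has already been parsed into the set of revoked serial numbers it lists (parsing the DER-encoded CRL is not shown). A serial that is absent from one snapshot and present in the next was published somewhere between the two crawl dates, so the crawl frequency bounds the precision. The names and the serial numbers in the usage example are hypothetical.

```python
from datetime import date
from typing import Dict, List, Set, Tuple

# A snapshot is (crawl date, set of revoked serial numbers seen in the CRL that day).
Snapshot = Tuple[date, Set[int]]


def infer_publication_windows(snapshots: List[Snapshot]) -> Dict[int, Tuple[date, date]]:
    """Map each newly revoked serial to the window (previous crawl, first crawl
    where it appeared] in which the CA must have published the revocation.

    Serials already present in the earliest snapshot have no inferable window.
    """
    ordered = sorted(snapshots, key=lambda s: s[0])
    windows: Dict[int, Tuple[date, date]] = {}
    for (prev_day, prev_serials), (day, serials) in zip(ordered, ordered[1:]):
        for serial in serials - prev_serials:
            windows.setdefault(serial, (prev_day, day))  # keep the first appearance
    return windows


if __name__ == "__main__":
    snaps = [
        (date(2020, 5, 5), {1, 2}),
        (date(2020, 5, 6), {1, 2, 3}),   # serial 3 (hypothetical) first appears here
    ]
    print(infer_publication_windows(snaps))
```

With daily crawls, as in this toy example, the window narrows the publication date down to a single day.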

Such long revocation delays are, unfortunately, quite common. In a 2018 paper on code-signing certificate revocations, we estimated that revocations are published, on average, 171.4 days after malware signed with the affected certificates appears in the wild. This is another consequence of the opacity of the code-signing PKI: it takes time for the CA and the certificate owner to learn about the signed malware that proves their certificate is compromised. Our paper includes more detailed findings about the challenges, and the resulting effectiveness, of certificate revocation in the code-signing PKI. To encourage further analysis, we also released the revocation publication dates and other data collected in this project at signedmalware.org.
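The delay metric itself is simple date arithmetic. A minimal Python sketch, assuming each certificate is summarized by two dates (the first sighting of malware signed with it and its inferred revocation publication date); the function names are hypothetical, and the 171.4-day average comes from the paper's dataset, which is not reproduced here.

```python
from datetime import date
from statistics import mean
from typing import Iterable, Tuple


def revocation_delay_days(first_signed_malware: date, published: date) -> int:
    """Days from the first sighting of signed malware to the revocation's publication."""
    return (published - first_signed_malware).days


def average_delay_days(pairs: Iterable[Tuple[date, date]]) -> float:
    """Mean delay over (first signed malware, revocation published) pairs."""
    return mean(revocation_delay_days(first, published) for first, published in pairs)


if __name__ == "__main__":
    # Nfinity Games certificate: first properly signed sample (2019-01-08)
    # versus the inferred revocation publication date (2020-05-06).
    print(revocation_delay_days(date(2019, 1, 8), date(2020, 5, 6)))
```

For the Nfinity certificate this gives 484 days, well above the average delay reported in the paper.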

What’s next?

This abuse of the Code-Signing PKI is not a new problem. Adversaries have enjoyed the benefits of malware signed with stolen private keys since 2003. This raises the question: Why is this still happening after 17 years of abuse? The fundamental problem is the opacity of the certificate ecosystem in the Code-Signing PKI. This is in stark contrast with the Web’s PKI (i.e., TLS), where researchers can collect TLS certificates by scanning the IPv4 address space or the registered domains. This transparency and ease of data collection have helped academic and industry researchers understand the weaknesses of the Web’s PKI and propose new techniques (e.g., CRLite, Certificate Transparency) that ultimately improved the security of the ecosystem.

A more important question is: How can we make the code-signing PKI transparent, and improve its security, despite its inherent opacity? Our research takes a first step in this direction, but we don’t have a panacea for the problems in this PKI. We believe, however, that the most practical solution is collaboration. Researchers cannot successfully study the code-signing PKI without datasets shared by security companies and other research groups; by working together, we can bring greater transparency to it. Our research would not have been possible without the help of collaborators from the security industry, Certificate Authorities, and investigative journalists.

We therefore encourage security practitioners to collaborate with academic researchers studying the code-signing PKI. This will allow our community to measure and better understand the PKI’s current security problems, and ultimately learn how to improve the system.