To understand security threats, and our ability to defend against them, an important question is "Can we patch vulnerabilities faster than attackers can exploit them?" (to quote Bruce Schneier). When asking this question, people usually think about creating patches for known vulnerabilities before exploits can be developed, or about discovering vulnerabilities before they can be targeted in zero-day attacks. However, another race may have an even bigger impact on security: once a patch is released, it must also be deployed on all the hosts running the vulnerable software before the vulnerability is exploited in the wild. There is some evidence that exploits are able to find sizable populations of vulnerable hosts: the Internet worms from 2001–2004 (e.g. Code Red, Slammer, Witty) propagated by exploiting known vulnerabilities, yet they were able to infect between 12K and 359K hosts.

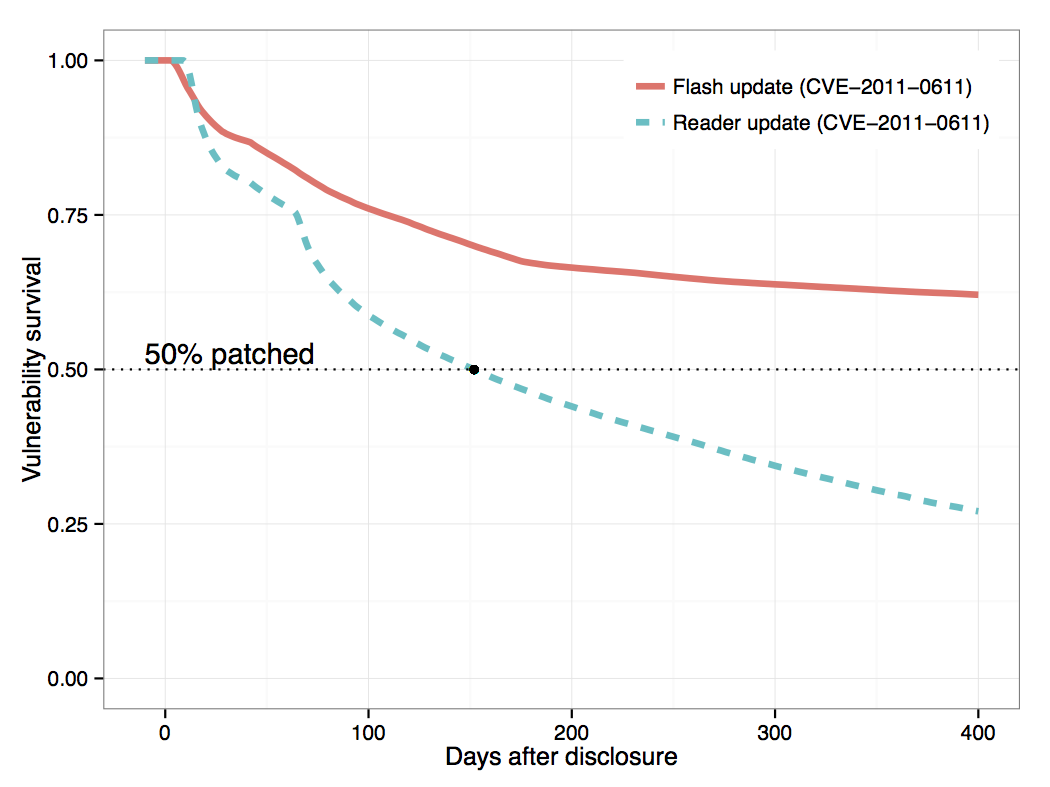

It turns out that it may not be that easy to patch vulnerabilities completely. Using WINE, we analyzed the patch deployment process for 1,593 vulnerabilities from 10 Windows client applications, on 8.4 million hosts worldwide [Oakland 2015]. We found that a host may be affected by multiple instances of the same vulnerability, either because the vulnerable program is installed in several directories or because the vulnerability is in a shared library distributed with several applications. For example, CVE-2011-0611 affected both Adobe Flash Player and Adobe Reader (Reader includes a library for playing .swf objects embedded in a PDF). Because updates for the two products were distributed through different channels, the vulnerable host population decreased at different rates, as illustrated in the accompanying figure. For Reader, patching started 9 days after disclosure (after the patch for CVE-2011-0611 was bundled with another patch in a new Reader release), and the update reached 50% of the vulnerable hosts after 152 days. For Flash, patching started earlier, 3 days after disclosure, but the patching rate soon dropped (a second patching wave, suggested by the inflection in the curve after 43 days, eventually subsided as well). Perhaps for this reason, CVE-2011-0611 was frequently targeted by exploits in 2011, using both the .swf and PDF vectors.
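The per-channel comparison above can be made concrete with a small sketch. This is not the analysis code from the paper: the function names and the toy patch days are assumptions made for illustration, and `None` marks a host that was never observed patching.

```python
import math
from typing import Optional, Sequence


def surviving_fraction(patch_days: Sequence[Optional[int]], day: int) -> float:
    """Fraction of hosts still vulnerable `day` days after disclosure."""
    still_vulnerable = sum(1 for d in patch_days if d is None or d > day)
    return still_vulnerable / len(patch_days)


def days_to_reach(patch_days: Sequence[Optional[int]],
                  target: float = 0.5) -> Optional[int]:
    """Earliest day on which at least `target` of the hosts are patched.

    Returns None if the target fraction is never reached in the data.
    """
    observed = sorted(d for d in patch_days if d is not None)
    needed = math.ceil(target * len(patch_days))
    if needed == 0:
        return 0
    if len(observed) < needed:
        return None
    return observed[needed - 1]


# Toy data (hypothetical, not from the study): the day, counted from
# disclosure, on which each of four hosts patched through each channel.
channel_a = [3, 4, 5, 6]          # fast update channel
channel_b = [10, None, 40, 200]   # slow update channel, one host never patches

half_a = days_to_reach(channel_a)              # 4
half_b = days_to_reach(channel_b)              # 40
left_b = surviving_fraction(channel_b, 30)     # 0.75
```

With these toy numbers the same vulnerability reaches 50% deployment ten times later on the slower channel, which is the kind of gap the two curves in the figure show.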

Now, copying and pasting source code (i.e. creating code clones) is known to be a bad programming practice: it breaks modularity, it results in code that is difficult to maintain and, when a vulnerability is discovered, it makes it challenging to patch all the copies of the vulnerable code. However, the sort of code sharing illustrated by the previous example is generally considered acceptable, as the common code is contained in a shared library. Each application is updated along with all the libraries it depends upon, in order to avoid incompatibilities and broken dependencies when installing software updates. Distributing patches for the shared library through separate channels, one for each application, reduces the risk of destabilizing the deployment environment; at the same time, this means that some instances of the vulnerability may remain unpatched for a while (and, worse, the owners of the vulnerable hosts may incorrectly believe that they have fixed the vulnerability). We also found multiple versions of the same application installed on the same host; for example, device drivers, such as those for printers, often install a (usually outdated) version of Adobe Reader to allow the user to read the manual. Such inactive applications, which are forgotten but remain installed, present an important security threat, as attackers may be able to invoke the inactive versions (we describe two such attacks in the paper). In general, we found that the time between patch releases in the different applications affected by the same vulnerability is up to 118 days (with a median of 11 days), and, as the patching rates vary widely among applications and application versions, many hosts patch only one instance of the vulnerability.
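The point that a host stays exposed until every instance is patched can be sketched as follows. The data layout, host names, and function names are hypothetical, chosen only to illustrate the reasoning; each host maps an installed instance to the day it was patched, with `None` meaning it was never patched.

```python
from typing import Dict, Optional

Instances = Dict[str, Optional[int]]


def is_patched(patch_day: Optional[int], day: int) -> bool:
    """True if this instance received the patch on or before `day`."""
    return patch_day is not None and patch_day <= day


def host_is_vulnerable(instances: Instances, day: int) -> bool:
    """A host is vulnerable as long as ANY instance remains unpatched."""
    return any(not is_patched(d, day) for d in instances.values())


def host_is_partially_patched(instances: Instances, day: int) -> bool:
    """Some, but not all, instances are patched: the owner may wrongly
    believe the vulnerability has been fixed."""
    states = [is_patched(d, day) for d in instances.values()]
    return any(states) and not all(states)


# Toy hosts (hypothetical): two instances of the same shared code per host.
hosts = {
    "host-a": {"flash": 5, "reader": 30},
    "host-b": {"flash": 4, "reader": None},
    "host-c": {"flash": None, "reader": None},
}

day = 10
vulnerable = sorted(h for h, inst in hosts.items()
                    if host_is_vulnerable(inst, day))
partial = sorted(h for h, inst in hosts.items()
                 if host_is_partially_patched(inst, day))
```

On day 10 of this toy example all three hosts are still vulnerable, and two of them (`host-a` and `host-b`) have patched only one of their two instances.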

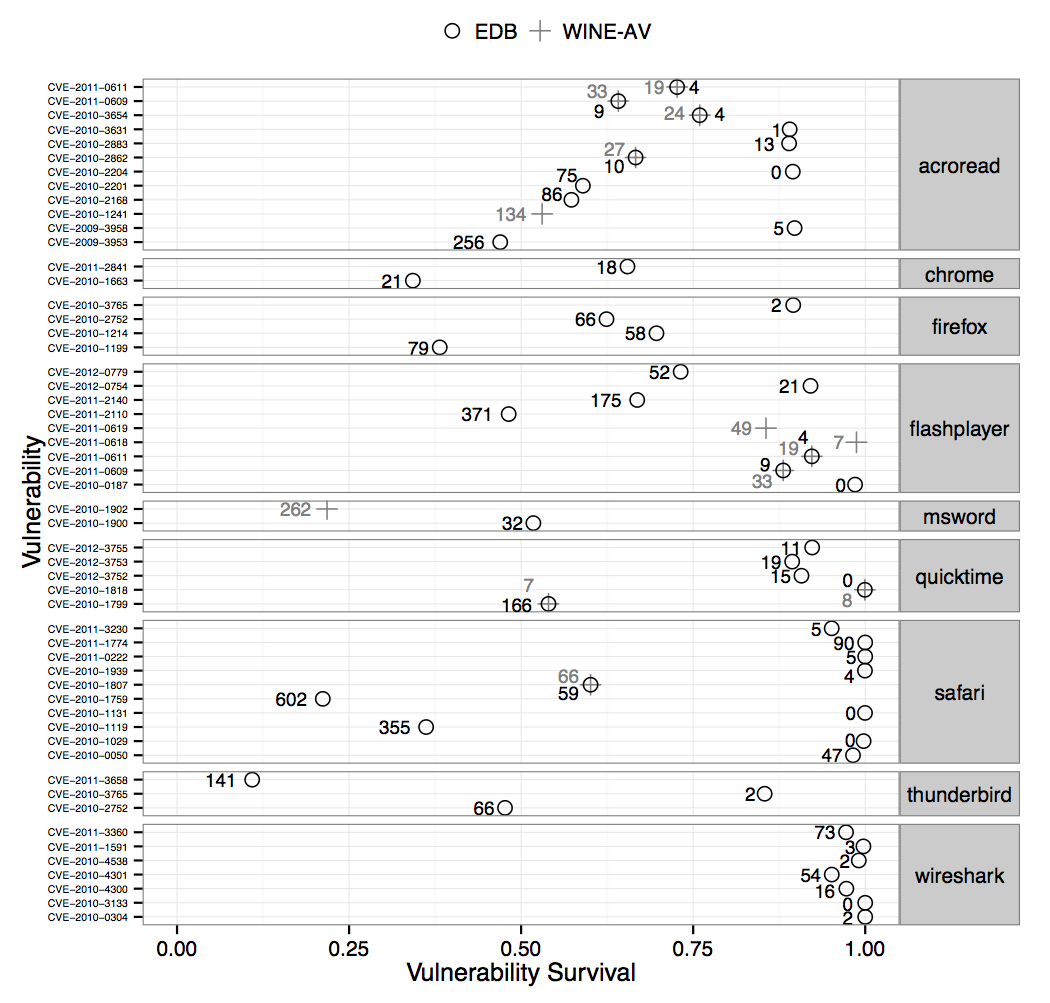

We also wondered what percentage of the susceptible host population remains vulnerable when exploits are released in the wild. This percentage is, obviously, 100% for zero-day attacks (which exploit unknown vulnerabilities that do not have patches available), but not every exploit is a zero-day exploit. Among the vulnerabilities in our study, we found 13 that had exploits active in the wild and 50 that had an exploit release date recorded in Exploit DB. For each of the 54 vulnerabilities in the union of these two sets, we extracted the earliest record of the exploit in each of the two databases and we determined the vulnerability survival level (the percentage of hosts that remained vulnerable) on that date. The accompanying figure illustrates these vulnerability survival levels: on the right of the X-axis 100% of hosts remain vulnerable, and on the left the vulnerability is completely patched. Each vulnerability is annotated with the number of days after disclosure when we observed the first record of the exploit. This exploitation lag is overestimated, because exploits are not detected immediately after they are released; the true release date is earlier, when fewer hosts had patched, so these vulnerability survival levels must be interpreted as lower bounds. Between 11% and 100% of the hosts remained vulnerable when the exploits became available, and the median survival level was 86%. In other words, the median fraction of hosts patched when exploits are released is at most 14%.
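The arithmetic behind the survival levels can be sketched in a few lines. The record layout and numbers below are toy values invented for illustration, not data from the study; the sketch takes the earliest exploit record across the two sources, computes the survival level on that date, and turns the median into an upper bound on the patched fraction.

```python
from statistics import median
from typing import Optional


def earliest_exploit_day(*days: Optional[int]) -> Optional[int]:
    """Earliest known exploit record (days after disclosure) across sources."""
    known = [d for d in days if d is not None]
    return min(known) if known else None


def survival_level(vulnerable_hosts: int, susceptible_hosts: int) -> float:
    """Percentage of susceptible hosts still vulnerable on a given date."""
    return 100.0 * vulnerable_hosts / susceptible_hosts


# Toy records (hypothetical): (day first seen in the wild, day recorded in
# an exploit database, hosts still vulnerable on the earliest of those days,
# total susceptible hosts). None means no record in that source.
records = [
    (12, 30, 900, 1000),
    (None, 5, 1000, 1000),
    (60, None, 500, 1000),
]

lags = [earliest_exploit_day(wild, db) for wild, db, _, _ in records]
levels = [survival_level(v, s) for _, _, v, s in records]

median_survival = median(levels)
# The observed survival level is a lower bound, so its complement is an
# upper bound on the fraction of hosts patched at exploit time.
max_median_patched = 100.0 - median_survival
```

For these toy records the median survival level is 90%, so at most 10% of hosts were patched at the median; the same subtraction turns the reported 86% into "at most 14%".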

These results illustrate the opportunity for exploitation, rather than measure successful attacks. Even if vulnerabilities remain unpatched, end-hosts may employ other defenses against exploitation, such as anti-virus and intrusion-detection products or mechanisms like data execution prevention (DEP), address space layout randomization (ASLR), or sandboxing. Our findings reflect the effectiveness of vulnerability patching, by itself, in defending hosts from exploits. In particular, we cannot always assume that our computers become immune to exploits of a given vulnerability after installing the patch: vulnerable hosts may patch only one of the program installations and remain vulnerable, while patched hosts may later re-join the vulnerable population if an old version, or a new application containing the old code, is installed. It also seems that vulnerability exploits can be very effective even if they are not zero-day exploits. More importantly, these results highlight a subtle conflict between the reliability and security goals of software updating mechanisms, which must minimize the window of exposure to vulnerability exploits while reducing the risk of breaking dependencies in the deployment environment.

Paper: [Oakland 2015] [Explore the data] [Clean vulnerability data set]

References

[Oakland 2015] A. Nappa, R. Johnson, L. Bilge, J. Caballero, and T. Dumitraș, “The Attack of the Clones: A Study of the Impact of Shared Code on Vulnerability Patching,” in IEEE Symposium on Security and Privacy, San Jose, CA, 2015.
