Prediction of Manipulation Actions


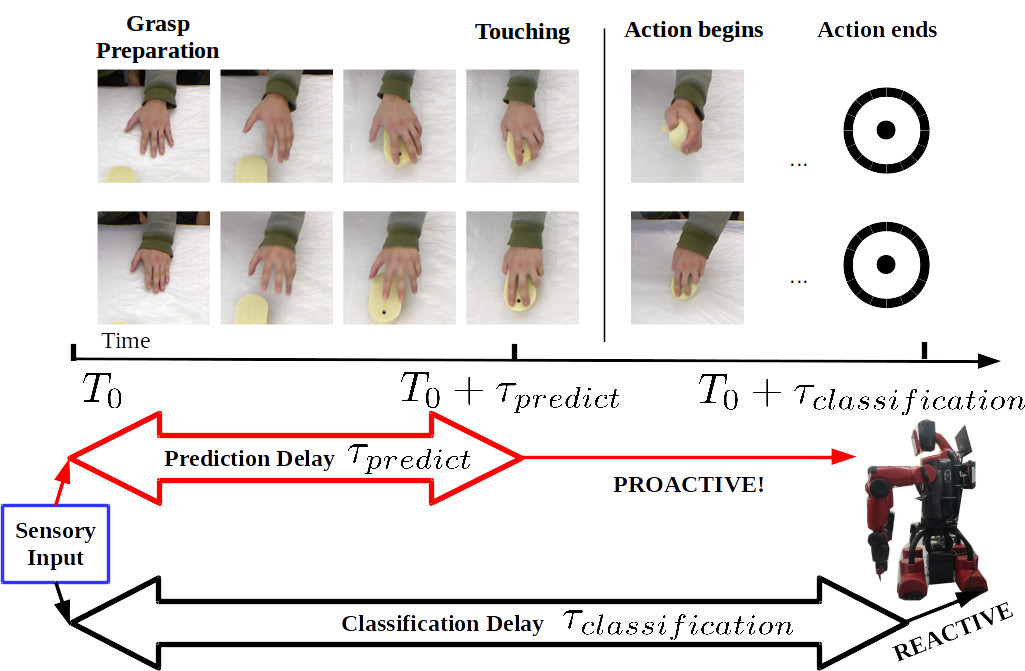

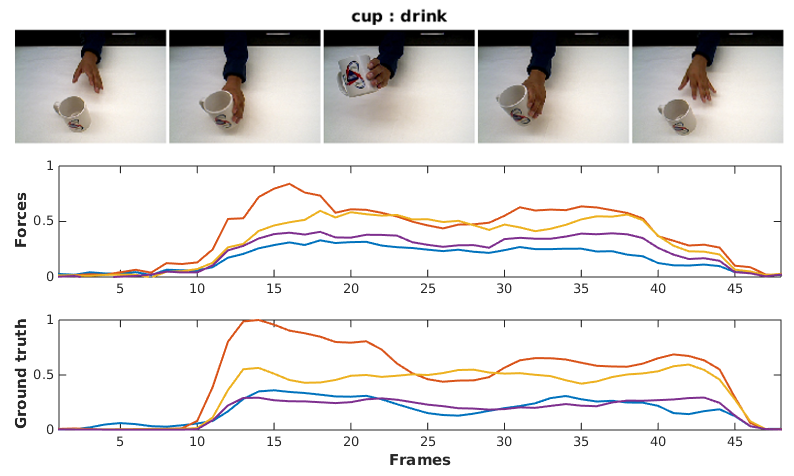

Looking at a person’s hands, one can often tell what the person is going to do next, how his/her hands are moving, and where they will be, because an actor’s intentions shape his/her movement kinematics during action execution. Similarly, active systems with real-time constraints must not simply rely on passive video-segment classification; they have to continuously update their estimates and predict future actions. In this paper, we study the prediction of dexterous actions. We recorded subjects performing different manipulation actions on the same object, such as "squeezing", "flipping", "washing", "wiping" and "scratching" with a sponge. In psychophysical experiments, we evaluated human observers' skill at predicting actions from video sequences of different lengths, depicting the hand movement in the preparation and execution of actions before and after contact with the object. We then developed a recurrent neural network based method for action prediction that takes patches around the hand as input. We also used the same formalism to predict the forces on the fingertips, training on synchronized video and force data streams. Evaluations on two new datasets show that our system closely matches human performance in the recognition task, and demonstrate the ability of our algorithms to predict in real time what dexterous action is being performed and how.
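The abstract describes online prediction with a recurrent network over hand-patch features. The sketch below illustrates only the inference loop, under stated assumptions: a plain Elman RNN (a stand-in; the released code may use a different recurrent cell, such as an LSTM) consumes one feature vector per frame, updates its hidden state, and emits a probability over the five sponge actions at every frame, so a guess is available before the action ends. The class name `OnlineActionRNN`, the feature, hidden and force-channel sizes, and the force-regression head sharing the same hidden state are all hypothetical choices, and the weights are random rather than trained.

```python
import numpy as np

# Action labels taken from the sponge actions listed in the abstract.
ACTIONS = ["squeezing", "flipping", "washing", "wiping", "scratching"]


class OnlineActionRNN:
    """Hypothetical Elman RNN: one hand-patch feature vector per frame in,
    action probabilities and a force estimate out at every frame.
    Weights are random here; a real model would be trained."""

    def __init__(self, feat_dim, hidden_dim, n_forces, seed=0):
        rng = np.random.default_rng(seed)
        s = 0.1  # small init scale for the illustrative random weights
        self.W_xh = rng.normal(0, s, (hidden_dim, feat_dim))
        self.W_hh = rng.normal(0, s, (hidden_dim, hidden_dim))
        self.b_h = np.zeros(hidden_dim)
        # Classification head over the action labels.
        self.W_cls = rng.normal(0, s, (len(ACTIONS), hidden_dim))
        self.b_cls = np.zeros(len(ACTIONS))
        # Hypothetical linear regression head for fingertip forces.
        self.W_f = rng.normal(0, s, (n_forces, hidden_dim))
        self.b_f = np.zeros(n_forces)
        self.h = np.zeros(hidden_dim)

    def reset(self):
        """Clear the hidden state before a new video sequence."""
        self.h = np.zeros_like(self.h)

    def step(self, x):
        """Consume one frame's feature vector; return (probs, force)."""
        self.h = np.tanh(self.W_xh @ x + self.W_hh @ self.h + self.b_h)
        logits = self.W_cls @ self.h + self.b_cls
        p = np.exp(logits - logits.max())  # numerically stable softmax
        p /= p.sum()
        force = self.W_f @ self.h + self.b_f
        return p, force


def run_sequence(model, frames):
    """Run the model over a (T, feat_dim) array; return per-frame
    probabilities (T, n_actions) and forces (T, n_forces)."""
    model.reset()
    probs, forces = [], []
    for x in frames:
        p, f = model.step(x)
        probs.append(p)
        forces.append(f)
    return np.stack(probs), np.stack(forces)
```

With trained weights, calling `run_sequence(model, frames)` on a partially observed clip yields the running prediction at every frame, and the last row of the probability array is the current best guess. Training (for example, backpropagation through time with a cross-entropy loss for actions and a squared-error loss for forces) is not shown.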

Real-time action prediction

Downloads

Manipulation Action Dataset (MAD)

To reproduce our experiments, we provide the preprocessed hand-patch features with action labels: mad-precalc-features.tar.gz

mad-meta-data.tar.gz

The raw image frames can be downloaded separately for each subject.

| Subjects | and | fer | gui | kos | mic |
|---|---|---|---|---|---|
| Frames | and_rgb.tar.gz | fer_rgb.tar.gz | gui_rgb.tar.gz | kos_rgb.tar.gz | mic_rgb.tar.gz |
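Because the MAD frames are split into one archive per subject, subject-wise evaluation is easy to set up. The sketch below derives the archive names from the table and enumerates leave-one-subject-out folds. This protocol is an assumption for illustration, not necessarily the one used in the paper, and the helper names `frame_archive` and `leave_one_subject_out` are hypothetical.

```python
# Subject codes from the MAD download table.
MAD_SUBJECTS = ["and", "fer", "gui", "kos", "mic"]


def frame_archive(subject):
    """Hypothetical helper: raw-frame archive name for one MAD subject,
    following the <subject>_rgb.tar.gz pattern in the table."""
    return f"{subject}_rgb.tar.gz"


def leave_one_subject_out(subjects):
    """Yield (train_subjects, test_subject) pairs, holding each subject out once
    (an assumed evaluation protocol, shown only as an example)."""
    for held_out in subjects:
        train = [s for s in subjects if s != held_out]
        yield train, held_out
```

For instance, `for train, test in leave_one_subject_out(MAD_SUBJECTS): print(test, [frame_archive(s) for s in train])` lists, for each held-out subject, the archives needed for training.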

Hand Action with Force Dataset (HAF)

For the HAF dataset, we also provide the preprocessed hand-patch features with action labels, plus the corresponding force data: haf-precalc-features.tar.gz

haf-meta-data.tar.gz
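Force sensors typically sample much faster than a camera, so training on synchronized video and force streams requires one force reading per frame. The sketch below shows one way to do this by linear interpolation of each force channel at the frame timestamps. It assumes both streams carry timestamps on a common clock; the actual layout of the HAF force files is not described here, and the function name `forces_at_frames` is hypothetical. If the provided force data are already aligned per frame, this step is unnecessary.

```python
import numpy as np


def forces_at_frames(frame_times, force_times, force_values):
    """Hypothetical alignment helper: linearly interpolate each force channel
    at the video frame timestamps.

    frame_times:  (T,) frame timestamps in seconds
    force_times:  (N,) force-sample timestamps in seconds, increasing
    force_values: (N, C) readings for C force channels
    Returns a (T, C) array with one force vector per frame.
    Frames outside the force time range get the nearest endpoint value
    (np.interp clamps rather than extrapolates).
    """
    frame_times = np.asarray(frame_times, dtype=float)
    force_times = np.asarray(force_times, dtype=float)
    force_values = np.asarray(force_values, dtype=float)
    return np.stack(
        [np.interp(frame_times, force_times, force_values[:, c])
         for c in range(force_values.shape[1])],
        axis=1,
    )
```

Linear interpolation is a simple choice; averaging the force samples that fall within each frame interval is an alternative when the force signal is noisy.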

The raw image frames can be downloaded separately for each subject.

| Subjects | fbr | fbr2 | fm | leo | yz |
|---|---|---|---|---|---|
| Frames | fbr.tar.gz | fbr2.tar.gz | fm.tar.gz | leo.tar.gz | yz.tar.gz |

Code and Models

We release our code for training the action prediction model (action_prediction_code.zip); brief instructions can be found in the README.

For the users' convenience, we also release the pretrained models for both action prediction and hand force estimation. Please unzip the files into the "model" folder to test the models.


- action prediction (action_prediction_model.zip)

- hand force estimation (force_estimation_model.zip)
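Unzipping the two pretrained-model archives into the "model" folder can be scripted with Python's standard `zipfile` module, as in the minimal sketch below. The helper name `unpack_models` is hypothetical, and the folder is assumed to be `model` relative to the current working directory; check the README for where the released code expects it.

```python
import zipfile
from pathlib import Path


def unpack_models(zip_paths, model_dir="model"):
    """Hypothetical helper: extract each pretrained-model archive into
    model_dir (created if missing) and return the extracted paths."""
    model_dir = Path(model_dir)
    model_dir.mkdir(parents=True, exist_ok=True)
    extracted = []
    for zp in zip_paths:
        with zipfile.ZipFile(zp) as zf:
            zf.extractall(model_dir)
            extracted.extend(model_dir / name for name in zf.namelist())
    return extracted
```

For example, `unpack_models(["action_prediction_model.zip", "force_estimation_model.zip"])` run from the directory containing both downloads extracts them into `./model`.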

Reference

Full paper [pdf]

@article{action_prediction_2016,
  title={Prediction of Manipulation Actions},
  author={Cornelia Fermüller and Fang Wang and Yezhou Yang and Konstantinos Zampogiannis and Yi Zhang and Francisco Barranco and Michael Pfeiffer},
  eprint={arXiv:1610.00759},
  year={2016}
}